By Hervé Legenvre and Erkko Autio

This article is part five of an ongoing series – The AI Power Plays – that explores the fiercely competitive AI landscape, where tech giants and startups battle for dominance while navigating the delicate balance of competition and collaboration to stay at the cutting edge of AI innovation.

This article examines how Hugging Face has emerged as a linchpin in the AI landscape through its innovative community-centric business model and seamless integration of AI models, datasets, frameworks, hardware, and cloud platforms. By fostering open-source collaboration and building partnerships with major technology providers, Hugging Face enables developers, researchers, and enterprises to co-create, share, and scale AI solutions efficiently.

The history of Hugging Face

Hugging Face was established in New York City in 2016 by French entrepreneurs Clément Delangue, Julien Chaumond, and Thomas Wolf. Wanting to explore state-of-the-art natural language processing (NLP) technology, they first created an AI-based chatbot for teenagers. From this, they shifted their focus to developing tools and resources to advance AI, particularly in NLP. By 2018, Hugging Face was creating open-source tools that democratize access to AI models, and it released the Transformers library, a toolkit that made it easier to use and fine-tune transformer-based language models (like BERT, GPT, and others) for various NLP tasks. This sparked the emergence of a community of developers who used this library and the other tools added by Hugging Face, whose mission had by then consolidated around democratizing artificial intelligence through open-source contributions. To date, Hugging Face has received investments from Google, Amazon, Nvidia, AMD, Intel, IBM, Qualcomm, and others. The company maintains a repository of over 1.2 million pre-trained models for a variety of AI tasks. These models can be used with little configuration, which spares users the complexity and extensive resources required to build AI solutions from scratch.

Hugging Face: a linchpin in the AI landscape

Today, Hugging Face has established itself as a linchpin in the AI landscape. The company facilitates the seamless integration of technological capabilities, fosters community collaboration, and acts as a catalyst for innovation. It empowers organizations, developers, and researchers to co-create and use pre-trained models, along with a variety of tools for building AI applications.

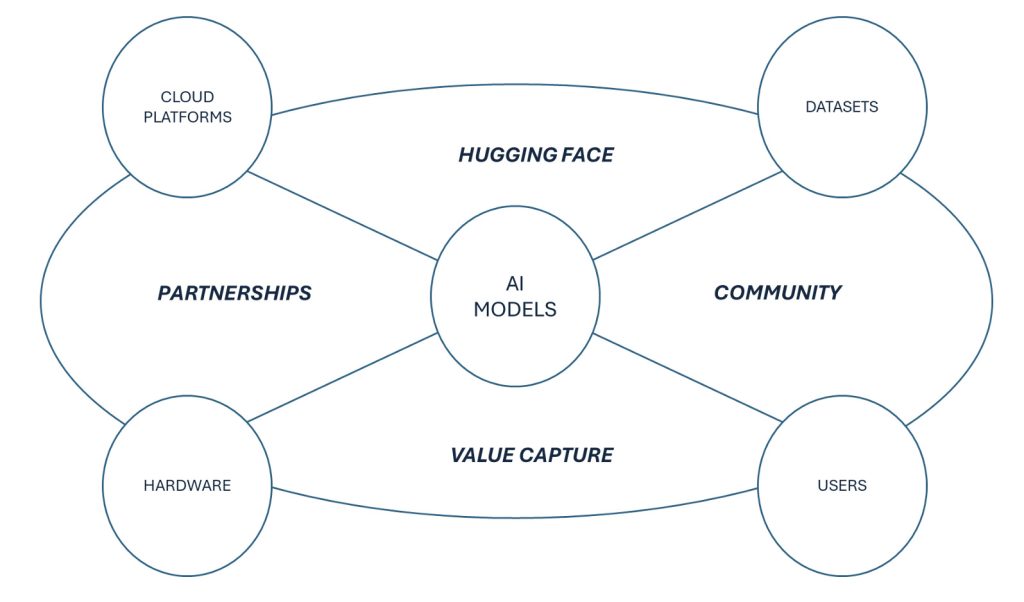

The platform creates seamless connection and integration between AI models, datasets, cloud platforms, and hardware, thus accelerating the adoption and deployment of AI solutions.

Figure 1: Hugging Face – a linchpin for the AI landscape

On the Hugging Face platform, the Model Hub is the central repository for hosting, sharing, selecting, and accessing pre-trained models across different domains (e.g., NLP, computer vision, audio processing, and multimodal tasks). Hugging Face facilitates the connection between AI models and thousands of datasets, enabling these datasets to be pre-processed with just a few lines of code before being used to train and fine-tune AI models for specific use cases.
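As a rough illustration of what "a few lines of code" can look like, the sketch below pre-processes a tiny in-memory dataset with the open-source `datasets` library. It is a minimal example under stated assumptions, not a description of any particular production workflow: the sample rows are made up, and `normalize` and `build_toy_dataset` are hypothetical helper names introduced here. A real project would more likely pull a dataset from the Hub (for instance with `load_dataset`) instead of building one by hand.

```python
from typing import Dict, List


def normalize(example: Dict[str, str]) -> Dict[str, object]:
    """Trim and lowercase the text field and record its word count."""
    text = example["text"].strip().lower()
    return {"text": text, "n_words": len(text.split())}


def build_toy_dataset(rows: List[str]):
    """Wrap raw strings in an in-memory Dataset and apply `normalize` to each row."""
    # Imported here so `normalize` stays usable without the third-party package.
    from datasets import Dataset

    # Illustrative, made-up rows; no Hub download is involved.
    dataset = Dataset.from_dict({"text": rows})
    # `map` runs the function on every example and merges the returned columns.
    return dataset.map(normalize)


if __name__ == "__main__":
    cleaned = build_toy_dataset(["  Hugging Face hosts MODELS ", "Datasets too  "])
    print(cleaned[0])
```

The same `map` pattern is typically where tokenization or other model-specific preparation would be plugged in before fine-tuning.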

Hugging Face integrates AI models with popular deep learning frameworks such as PyTorch and TensorFlow. This integration simplifies the use of machine learning models and offers developers and researchers the flexibility to work within their preferred framework. The platform thus eliminates the complexity associated with running AI models across different frameworks. These integrations are the result of Hugging Face’s collaboration with PyTorch (Meta) and TensorFlow (Google) teams and related contributions from the open-source community.

Hugging Face also facilitates the integration of models with diverse cloud platforms to enable scalable training, deployment, and inference. Major cloud providers are keen to simplify users’ access to their infrastructure, and they therefore partner with Hugging Face to ensure that AI workflows can be executed efficiently on their platforms.

Finally, the same collaboration and integration logic also applies to hardware providers. These collaborations and integrations ensure that AI models run optimally on specific hardware configurations, making them faster and more scalable. Hardware providers are therefore keen to support Hugging Face so AI workflows can be executed efficiently on their hardware.

Hugging Face serves as a linchpin in the AI ecosystem because it sits at the intersection of AI models, datasets, frameworks, hardware, and cloud platforms, creating a cohesive environment where these technologies can work together seamlessly. Key technology providers, such as NVIDIA, AMD, Google, AWS, Meta, and others, have a vested interest in ensuring that their tools, hardware, and platforms integrate effortlessly with Hugging Face’s offerings. This integration not only enhances the usability of their technology but also provides them with access to Hugging Face’s vast and engaged community of developers, researchers, and enterprises. By fostering interoperability and collaboration, Hugging Face helps technology providers amplify their reach while empowering users to build, deploy, and scale AI solutions faster and more effectively. This unique position makes Hugging Face indispensable to the AI community and allows it to facilitate the evolution of the entire AI landscape.

How does Hugging Face capture value?

Its strong open-source ethos is the reason why Hugging Face has been able to establish itself as a linchpin in the AI community. Yet this ethos also means that Hugging Face needs a business model that balances community collaboration with monetization. Accordingly, its primary revenue streams derive from subscription-based enterprise offerings, advanced tools, and partnerships.

Access to the Hugging Face Hub remains free for individual developers. Hugging Face also offers paid Pro and Enterprise Plans for businesses. These plans provide private model hosting, advanced collaboration tools, and integration with cloud platforms to enable business customers to build AI projects while protecting their proprietary assets. Hugging Face has also created advanced proprietary tools, offered at a price, that can be used to train and access pre-trained AI models.

Hugging Face also generates revenue from partnerships with major cloud providers, including AWS, Microsoft Azure, and Google Cloud, which further expand Hugging Face’s reach. These partnerships facilitate seamless integrations and related revenue-sharing opportunities, enabling enterprises to more effectively deploy Hugging Face’s tools on their platforms.

This combination of paid and open-source offerings ensures that Hugging Face captures value while continuing to democratize AI through open-source innovation and community-driven progress.

Hugging Face’s community-centric business model

Hugging Face’s community-centric business model casts various AI community stakeholders (developers, researchers, contributors, technology providers, and others) as active co-creators of shared open-source resources rather than passive consumers of Hugging Face’s offerings. By fostering shared value creation and collective engagement, community-centric open-source business models empower their communities to drive innovation while sustaining long-term growth. This business model rests on five key success factors:

- Shared Purpose: Hugging Face unites the AI developer community around a common purpose: advancing the frontiers of AI through collaboration and an open-source ethos. By offering access to tools like the Transformers library, Hugging Face aligns academic researchers, businesses, and developers with this shared purpose. This helps foster a sense of ownership and motivates community members to contribute and engage meaningfully.

- Delivering Tangible Value: Hugging Face provides a platform for accessing, sharing, and deploying machine learning models and datasets. It also ensures seamless connectivity and compatibility across multiple AI resources. By lowering barriers to entry, the Hugging Face platform empowers developers to focus on building innovative solutions and use cases instead of duplicating foundational work. This ability to build on the contributions of others helps deliver immediate, tangible value and encourages continued engagement and adoption.

- Recognition: Hugging Face ensures that contributors receive meaningful recognition for their participation. Users who upload pre-trained models or datasets, or who make other contributions, gain visibility and recognition within the Hugging Face community. This community recognition helps maintain a positive dynamic where contributors’ efforts are acknowledged and amplified while enriching the overall ecosystem and its shared resources.

- Collective and Decentralized Innovation: Hugging Face harnesses the collective intelligence of its community while encouraging decentralized innovation. By empowering developers to actively shape open-source tools and technologies, the platform remains responsive to emerging trends. This adaptability allows Hugging Face to remain the community of choice for developers and researchers in the rapidly evolving AI landscape.

- Trust Through Transparency: Transparency is a cornerstone of Hugging Face’s success. Open governance, clear decision-making rules, and transparent resource allocation foster trust and accountability. By aligning stakeholders with the platform’s mission, Hugging Face builds trust and encourages long-term participation and collaboration.

Hugging Face’s ability to integrate these principles demonstrates the power of community-centric open-source business models. By uniting its community around a shared purpose, delivering tools that create tangible value, and fostering trust, Hugging Face has built a self-sustaining developer ecosystem that helps drive progress in AI.

Previously in the AI Power Play series

- Why Meta is Positioning Itself as the Champion of Open-Source AI

- Why is OpenAI Moving Towards a Closed Source Strategy?

- Google and AI: The Tech Leader That Had a Perfect AI Plan Until November 2022

- NVIDIA: Harnessing Open Innovation to Promote User Lock-in

Coming next in the AI Power Play series:

- The forces shaping AI open-source dynamics

Hervé Legenvre
