By Kim E. van Oorschot, Luk N. Van Wassenhove, Kishore Sengupta & Henk Akkermans

Research shows that project managers continuously prioritised good vibes (positive, but subjective signals) over bad news (negative, but objective signals), which resulted in decisions of poor quality. Without understanding the root causes that generate the bad news and the good vibes, managers could make the wrong decisions.

1. Introduction

Managers are frequently surprised by problems in complex new product development projects (Browning and Ramasesh, 2015). Often these surprises come from “unknown unknowns”: the things we don’t know we don’t know. In response, many tools have been developed to help managers discover these unknown unknowns and turn them into known unknowns to which risk management procedures can be applied. These tools are both hard and soft, targeting both the analytical, mechanical side of the project and the behavioural, organic side. Examples of the former are checklists, decomposition, scenario analysis (Browning and Ramasesh, 2015) and stage gates (Cooper, 2008). The latter include informal dialogues with project sponsors (Kloppenborg and Tesch, 2015), building a wide range of experiential expertise (Browning and Ramasesh, 2015), frequent communication (Laufer et al., 2015), help seeking (Sting et al., 2015), and focusing on weak signals (Schoemaker and Day, 2009).

What all these tools have in common is that they aim for clarity in projects that follow an uncertain path through foggy and shifting markets and technologies (Eisenhardt and Tabrizi, 1995). The price to pay for this is more information that needs to be processed by the project manager. Project managers need to make sense of all sorts of signals, whether they come from analytical tools or informal dialogues and communication, whether they are strong or weak, positive or negative, objective or subjective. Eventually, all signals need to be merged to make project decisions. Yet, little is known about how project managers actually make sense of a multitude of (often mixed) signals.

[ms-protect-content id=”9932″]2. Information Overload

Managers operating in fast-paced and demanding environments face the dilemma that numerous factors and options surround every decision (Seo and Barrett, 2007). They must make decisions against a background of ambiguous information, high-speed change and a lack of ability to verify all facts (Bourgeois and Eisenhardt, 1988; Oliver and Roos, 2005). This makes it extremely difficult or even impossible to make an optimal decision within a limited time frame. Information overload occurs because either too many messages are delivered and it appears impossible to respond to them adequately or incoming messages are not sufficiently organised to be easily recognised (Antioco et al., 2008). To prevent overload, in practice, people “satisfice” or seek solutions that seem good enough in a given situation, making decisions that are – at best – based on bounded rationality (Oliver and Roos, 2005); i.e. “the limits upon the ability of human beings to adapt optimally, or even satisfactorily, to complex environments.” (p. 132, Simon, 1991). Information overload and bounded rationality force decision-makers to use heuristics, “standard rules that implicitly direct our judgment [and] serve as a mechanism for coping with the complex environments surrounding our decisions.” (p. 6, Bazerman, 1994).

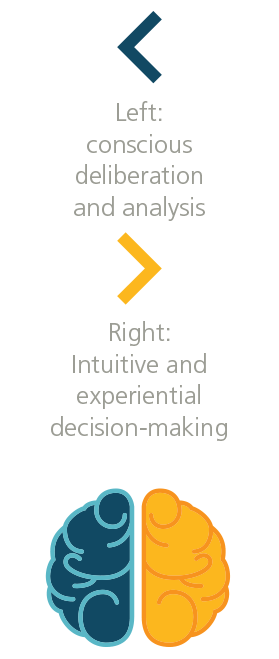

Previous research shows that decision-making improves when both the right and left side of the brain are used (Seo and Barrett, 2007; Oliver and Roos, 2005). The right side has been referred to as information processing system 1 (Kahneman, 2003). System 1 is rapid. This process is non-conscious, with important parallels to perceptual processes and linked to intuitive and experiential decision-making (Dane and Pratt, 2007). The right side reads between the lines, uses intangible information, gut feeling and previous experiences. The left side, system 2, uses the facts: tangible, objective, and measurable information. This is the slower system and involves conscious deliberation and analysis (Dane and Pratt, 2007; Fenton-O’Creevy et al., 2011). Using both sides not only improves the speed of the decision making process, but also the quality of the decision (Damasio, 1994).

To reap the full benefits of the rapid information processing system, and to prevent the potentially harmful biases that can be induced by this system (Dane and Pratt, 2007; Fenton-O’Creevy et al., 2011; Seo and Barrett, 2007), intuition and gut feelings need to be attributed to correct causes (Forgas and Ciarrochi, 2002; Seo and Barrett, 2007; Schwarz and Clore, 1983). However, for managerial intuition to be effective, years of experience in problem solving are required (Dane and Pratt, 2007; Fenton-O’Creevy et al., 2011; Khatri and Ng, 2000). Research has tended to characterise intuition as integral to expert performance (Dreyfus and Dreyfus, 2005). Experts seem to be better calibrated and less overconfident than novices (Koehler et al., 2002; Tsai et al., 2008). Experts are accurate and quick to make decisions in ill-structured situations because they use their experience to recognise a situation, and then make decisions that have worked previously. Over time, experts increasingly use their accumulated knowledge to make decisions and take advantage of their prior knowledge (Gonzalez and Quesada, 2003; Gonzalez et al., 2003).

But experience also has a darker side. It introduces the possibility of biases (Sleesman et al., 2012; Staw, 1981). Furthermore, people tend to access previous knowledge that bears surface similarity (what is most accessible in memory), rather than structural similarity (what is more useful), to the problem at hand (Thompson et al., 2000). In a stable decision making context, it is expected that decision makers will eventually learn, by mere repetition, what is useful and what is not. However, in a complex and changing context, experience is often a poor teacher. Even highly capable individuals are confused by the difficulties of using small samples of ambiguous experience to interpret complex worlds (Levinthal and March, 1993). Especially in dynamically complex environments, characterised by a large number of interacting components that feed back to influence themselves, learning can break down and lead to the experience trap (Diehl and Sterman, 1995; Sengupta et al., 2008). As a result, individuals can create their own potentially inaccurate beliefs, based on subjective interpretations of events (Lapré and Van Wassenhove, 2003; Staats et al., 2015).

When learning breaks down, we cannot trust our gut feeling that is based on previous experiences. As such, we expect that when decision makers use both system 1 (gut feeling) and 2 (rational) to process large amounts of information in complex dynamic situations, like new product development projects, the quality of their decisions will suffer. Over time, poor quality of decisions will lead to problems in the project. These problems will generate more information that needs to be processed, which forces managers to rely even more on system 1, thereby only making matters worse. Although some project problems may indeed have been caused by unknown unknowns (Browning and Ramasesh, 2015), it is likely that some project problems are generated as side effects of project managers’ past decisions.

Through an in-depth study of a multimillion-dollar new product development project in the semiconductor industry, we find that managers discuss both objective and subjective information before making decisions. However, due to the lack of time to process every piece of information, they rely more on subjective information. This becomes problematic in situations characterised by mixed signals of bad news (negative, measurable project facts) and good vibes (positive, subjective feelings). An example of such a mixed signal is: “the project is delayed by one week (bad news), but we have made great progress and team spirit is high (good vibe).” Without understanding the root causes that generate the bad news and the good vibes, managers can make the wrong decisions.

It took the project management team in our study over 80 weeks to realise that the project could not be completed within the given time, budget and quality constraints, and the project was cancelled after more than 20 million dollars had been spent. During these 80 weeks, the team was convinced that they could finish the project successfully. How could they make themselves and the steering committee believe this?

3. Feedback Loops of Information Processing

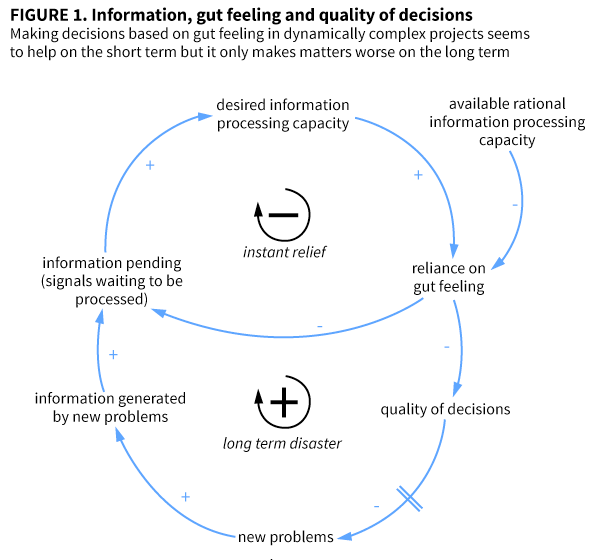

From analysing the discussions the team had during their weekly project management meetings, we find that the team repeatedly decided to postpone the due date of the current project phase, at the expense of the due date of the next phase, but not at the expense of the final project deadline. When these decisions were made, negative facts were outnumbered by positive feelings. The team seemed to give more weight to good vibes than to bad news. As such, the team members persuaded themselves that they could make up for current delays in the future, thereby enabling them to stick to the original deadline that was communicated to the customer. This decision to allow more time for the current phase gave instant relief. It therefore seemed to be a good decision, and it helped to resolve many of the current scheduling problems in the project. The project seemed to be in control. This is expressed by a balancing feedback loop. (See “Information, Gut Feeling, and Quality of Decisions.”) This feedback loop describes how, when managers need to process a lot of information, they do not have the capacity to analyse every signal rationally and, as a result, have to rely on gut feeling. In doing so, they increase their ability to process information, which reduces the number of pending signals waiting for a response.

However, when the next phase in the project approached, it became clear that the short time that was allocated to this next phase was not a solution but a huge problem. This problem created more information that needed to be processed, forcing the team to rely even more on gut feeling. This is reflected by a reinforcing feedback loop that spirals the system out of control. Gut feelings lead to decisions of poor quality, which cause problems that increase the amount of information that needs to be processed. This only reinforces the need to use gut feeling in dealing with the information overload. (See Figure 1.)
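The interplay of the two loops can be illustrated with a small toy simulation. This is only a sketch under invented assumptions, not the model used in the study: the function name `simulate`, all parameter names and all default values are hypothetical. Each week, signals beyond what can be analysed rationally are handled by gut feeling (the balancing loop), and a share of those gut decisions resurfaces a few weeks later as problems that generate extra signals (the reinforcing loop).

```python
from collections import deque


def simulate(weeks=30, inflow=10.0, rational_capacity=8.0,
             error_rate=0.6, signals_per_problem=2.0, delay=3):
    """Toy model of the two feedback loops; every value here is invented."""
    # Problems caused by gut decisions surface `delay` weeks later (delay >= 1).
    in_transit = deque([0.0] * delay)
    history = []
    for week in range(weeks):
        # Reinforcing loop: earlier poor decisions now create extra signals.
        surfaced_problems = in_transit.popleft()
        incoming = inflow + surfaced_problems * signals_per_problem
        # Rational analysis handles what it can within its capacity.
        by_analysis = min(incoming, rational_capacity)
        # Balancing loop: everything else is cleared quickly by gut feeling.
        by_gut = incoming - by_analysis
        # A share of gut decisions turns into future problems.
        in_transit.append(by_gut * error_rate)
        history.append((week, by_analysis, by_gut))
    return history


if __name__ == "__main__":
    for week, by_analysis, by_gut in simulate()[::5]:
        print(f"week {week:2d}: analysed={by_analysis:.1f} gut={by_gut:.1f}")
```

With these invented defaults, the number of signals handled by gut feeling grows over time, because each round of gut decisions feeds more signals back into the system than it removed. Setting `error_rate=0.0` switches off the reinforcing loop, and the gut-feeling workload stays constant.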

4. Trusting Gut Feeling Leads to Poor Decision Quality

Our results indicate that managers discuss both objective and subjective information before making decisions. However, due to the lack of time to process every piece of information, they rely more on subjective information. This becomes tricky when the signals consist of a mix of bad news and good vibes. By not focusing on the root causes, managers could make wrong choices. Because these decisions seem to be good in the short term, it takes a long time before managers realise that the project is in trouble and that problems are being aggravated instead of solved. When problems suddenly escalate, this may seem surprising, but these problems are simply a side effect of poor decisions made in the past, not the result of the unknown unknowns that are usually blamed for project failures.

In dynamically complex projects, managers surround themselves with all sorts of Gantt charts, MS Project files, task lists, tracking items, red flags, output charts, and resource profiles. It seems that the more complex the project is, the more information is desired. But more is not always better. Paradoxically, our findings indicate that the more information managers need to process in a short time, the more they tend to rely on their gut feeling when making decisions. Our study shows that this can be very dangerous. Managers should not ignore their feelings (or the feelings of their team members). But feelings should be handled with care when they influence decision making. When, in the face of project problems, team members report how well they are working together, that they are making good progress, or that they expect to make good progress in the future, managers should be careful. Where do these statements come from? Is it sheer hope, or is the remark based on an actual understanding of how the dynamic system works? In the former case, it is recommended to ignore the good vibe and stick to the facts; in the latter case, it is better to acknowledge the vibe and use it as a decision facilitator (and as such, use gut feeling to make decisions). If managers operate in a dynamically complex system that they fail to fully understand, this does not mean that they are bound to make the wrong decisions. In these situations, managers should carefully consider what kind of information they need. For example, project managers could ask someone to tally the number of events that reflect good or bad news, and good or bad vibes, and then to plot these events over time. Such graphs provide deeper insight into the status of the project and into project managers’ perceptions of this status. They can serve as a starting point for an improved understanding of the situation, which will lead to a better use of both facts and feelings about the project.
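The tallying exercise suggested above could be sketched as follows. This is a minimal illustration, not a tool from the study: the function `tally_by_week`, the category labels and the sample meeting log are all hypothetical and invented for this example. The function counts, per week, how many logged events fall into each of the four categories and returns one time series per category that can be handed to any charting tool.

```python
from collections import Counter

# Hypothetical labels for the four kinds of signals discussed in the text.
CATEGORIES = ("good news", "bad news", "good vibe", "bad vibe")


def tally_by_week(events):
    """Count events per (week, category).

    `events` is an iterable of (week, category) pairs, for example coded
    by hand from the minutes of weekly project meetings.
    """
    counts = Counter()
    for week, category in events:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        counts[(week, category)] += 1
    weeks = sorted({week for week, _ in counts})
    # One series per category; weeks without such events get a count of 0.
    return {cat: [(w, counts[(w, cat)]) for w in weeks] for cat in CATEGORIES}


# Invented meeting log, for illustration only.
log = [
    (1, "bad news"), (1, "good vibe"), (1, "good vibe"),
    (2, "bad news"), (2, "bad news"), (2, "good vibe"),
    (3, "bad news"), (3, "good vibe"), (3, "good vibe"), (3, "good vibe"),
]

series = tally_by_week(log)
for category, points in series.items():
    print(category, points)
```

Plotting the "bad news" and "good vibe" series on the same time axis makes it visible when positive feelings persistently outnumber negative facts, which is the pattern the text warns about.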

[/ms-protect-content]

About the Authors

Kim E. van Oorschot (kim.v.oorschot@bi.no) is a professor of project management at BI Norwegian Business School. She received her PhD in industrial engineering from Eindhoven University of Technology. Her research interests include decision making, trade-offs, and tipping points in dynamically complex settings such as new product development (NPD) projects.

Luk N. Van Wassenhove is the Henry Ford Chair of Manufacturing at INSEAD and a Fellow of POMS, EUROMA, and MSOM. He is also a Gold Medalist of EURO. His recent work focuses on sustainability (circular economy) and humanitarian operations.

Kishore Sengupta (kishore.sengupta@insead.edu) is a Reader in Operations at Cambridge Judge Business School, University of Cambridge. His research examines managerial behaviours in the execution of complex projects, and their consequences for the outcomes of such projects. His more recent work focuses on the role of stakeholders in large innovation projects.

Henk Akkermans is a professor of Supply Chain Management at Tilburg University and the director of the World Class Maintenance foundation in Breda, The Netherlands. His research addresses the issue of how inter-organisational supply chains and networks, where no single party exerts full control, can nevertheless effectively co-ordinate their behaviour.

References

• Antioco, M., Moenaert, R.K. and Lindgreen, A. (2008), “Reducing ongoing product design decision-making bias”, Journal of Product Innovation Management, Vol. 25, pp. 528-545.

• Bazerman, M.H. (1994), Judgment in managerial decision making, New York, Wiley.

• Bourgeois III, L.J. and Eisenhardt, K.M. (1988), “Strategic decision processes in high velocity environments: Four cases in the microcomputer industry”, Management Science, Vol. 34, pp. 737–770.

• Browning, T.R. and Ramasesh, R.V. (2015), “Reducing unwelcome surprises in project management”, Sloan Management Review, Vol. 56 No. 3, pp. 53-62.

• Cooper, R.G. (2008), “Perspective: The stage-gate® idea-to-launch process-update, what’s new, and nexgen systems”, Journal of Product Innovation Management, Vol. 25 No. 3, pp. 213-232.

• Damasio, A.R. (1994), Descartes’ Error: Emotion, Reason, and the Human Brain, New York, Putnam.

• Dane, E. and Pratt, M.G. (2007), “Exploring intuition and its role in managerial decision making”, Academy of Management Review, Vol. 32 No. 1, pp. 33–54.

• Diehl, E. and Sterman, J.D. (1995), “Effects of feedback complexity on dynamic decision making”, Organizational Behavior and Human Decision Processes, Vol. 62 No. 2, pp. 198–215.

• Dreyfus, H. and Dreyfus, S. (2005), “Expertise in real world contexts”, Organization Studies, Vol. 26, pp. 779–792.

• Eisenhardt, K.M. and Tabrizi, B.N. (1995), “Accelerating Adaptive Processes: Product Innovation in the Global Computer Industry”, Administrative Science Quarterly, Vol. 40, pp. 84–110.

• Fenton-O’Creevy, M., Soane, E., Nicholson, N. and Willman, P. (2011), “Thinking, feeling and deciding: The influence of emotions on the decision making and performance of traders”, Journal of Organizational Behavior, Vol. 32 No. 8, pp. 1044–1061.

• Forgas, J.P. and Ciarrochi, J.V. (2002), “On managing moods: Evidence for the role of homeostatic cognitive strategies in affect regulation”, Personality and Social Psychology Bulletin, Vol. 28, pp. 336–345.

• Gonzalez, C. and Quesada, J. (2003), “Learning in dynamic decision making: The recognition process”, Computational & Mathematical Organization Theory, Vol. 9, pp. 287–304.

• Gonzalez, C., Lerch, J.F. and Lebiere, C. (2003), “Instance-based learning in dynamic decision making”, Cognitive Science, Vol. 27 No. 4, pp. 591–635.

• Kahneman, D. (2003), “A perspective on judgment and choice”, American Psychologist, Vol. 58, pp. 697–720.

• Khatri, N. and Ng, H.A. (2000), “The role of intuition in strategic decision making”, Human Relations, Vol. 53, pp. 57–86.

• Kloppenborg, T.J. and Tesch, D. (2015), “How executive sponsors influence project success”, Sloan Management Review, Vol. 56 No. 3, pp. 27-30.

• Koehler, D.J., Brenner, L. and Griffin, D. (2002), “The calibration of expert judgment: Heuristics and biases beyond the laboratory.” In T. Gilovich, D. Griffin, & D. Kahneman (Eds.), Heuristics and biases: The psychology of intuitive judgment, pp. 686–715, New York, Cambridge University Press.

• Lapré, M.A. and Van Wassenhove, L.N. (2003), “Managing learning curves in factories by creating and transferring knowledge”, California Management Review, Vol. 46 No. 1, pp. 53-71.

• Laufer, A., Hoffman, E.J., Russell, J.S. and Cameron, W.S. (2015), “What successful project managers do”, Sloan Management Review, Vol. 56 No. 3, pp. 43-51.

• Levinthal, D.A. and March, J.G. (1993), “The Myopia of Learning”, Strategic Management Journal, Vol. 14, pp. 95-112.

• Oliver, D. and Roos, J. (2005), “Decision-Making in High-Velocity Environments: The Importance of Guiding Principles”, Organization Studies, Vol. 26 No. 6, pp. 889-913.

• Schoemaker, P.J.H. and Day, G.S. (2009), “How to make sense of weak signals”, Sloan Management Review, Vol. 50 No. 3, pp. 81-89.

• Schwarz, N. and Clore, G.L. (1983), “Mood, misattribution and judgments of well-being: Informative and directive functions of affective states”, Journal of Personality and Social Psychology, Vol. 45, pp. 513–523.

• Sengupta, K., Abdel-Hamid, T.K. and Van Wassenhove, L.N. (2008), “The experience trap”, Harvard Business Review, Vol. 86 No. 2, pp. 94-.

• Seo, M.G. and Barrett, L.F. (2007), “Being emotional during decision making – good or bad? An empirical investigation”, Academy of Management Journal, Vol. 50 No. 4, pp. 923-940.

• Simon, H. (1991), “Bounded rationality and organizational learning”, Organization Science, Vol. 2 No. 1, pp. 125-134.

• Sleesman, D.J., Conlon, D.E., McNamara, G. and Miles, J.E. (2012), “Cleaning up the big muddy: A meta-analytic review of the determinants of escalation of commitment”, Academy of Management Journal, Vol. 55 No. 3, pp. 541-562.

• Staats, B.R., KC, D.S. and Gino, F. (2015), “Blinded by experience: Prior experience, negative news and belief updating”, Harvard Business School, working paper 16-015.

• Staw, B.M. (1981), “The escalation of commitment to a course of action”, Academy of Management Review, Vol. 6 No. 4, pp. 577-587.

• Sting, F.J., Loch, C.H. and Stempfhuber, D. (2015), “Accelerating projects by encouraging help”, Sloan Management Review, Vol. 56 No. 3, pp. 33-41.

• Thompson, L., Gentner, D. and Loewenstein, J. (2000), “Avoiding missed opportunities in managerial life: Analogical training more powerful than individual case training”, Organizational Behavior and Human Decision Processes, Vol. 82 No. 1, pp. 60–75.

• Tsai, C.I., Klayman, J. and Hastie, R. (2008), “Effects of amount of information on judgment accuracy and confidence”, Organizational Behavior and Human Decision Processes, Vol. 107 No. 2, pp. 97–105.