In the aftermath of the initial AI tidal wave that has rolled across business and industry, there are signs that the emphasis may now be shifting from how best to apply the technology to how best to demonstrate its trustworthiness.

The term “artificial intelligence” (AI) was coined in the mid-1950s at Dartmouth College, in a workshop proposal that defined AI as “the upcoming science and engineering of making intelligent machines”¹. Since then, the field has gone through ups and downs, but it re-emerged about 10 years ago on the back of groundbreaking advances in deep learning, followed by the deployment of generative adversarial networks (GANs), variational autoencoders and, most recently, transformers (Vaswani et al., 2017; Haenlein and Kaplan, 2019).

The crux

With all the surrounding buzz, the many claimed benefits of AI may arouse suspicion in some. Indeed, Gartner’s Hype Cycle suggests that we may be entering a phase of “inflated expectations”. In any case, AI’s benefits must be balanced against new social, trust, and ethical challenges.

As with any technology (McKnight et al., 2011), AI will only become pervasive if it benefits society rather than being used dangerously or abusively. Whether the label is beneficial AI, responsible AI (Wiens et al., 2019), ethical AI (Floridi et al., 2018), trustworthy AI (EU Experts Group²), or explainable AI (Hagras, 2018), the variety of terminology is a reminder that AI needs a foundation of trust to thrive (Rudin, 2019).


A glimpse at the journey

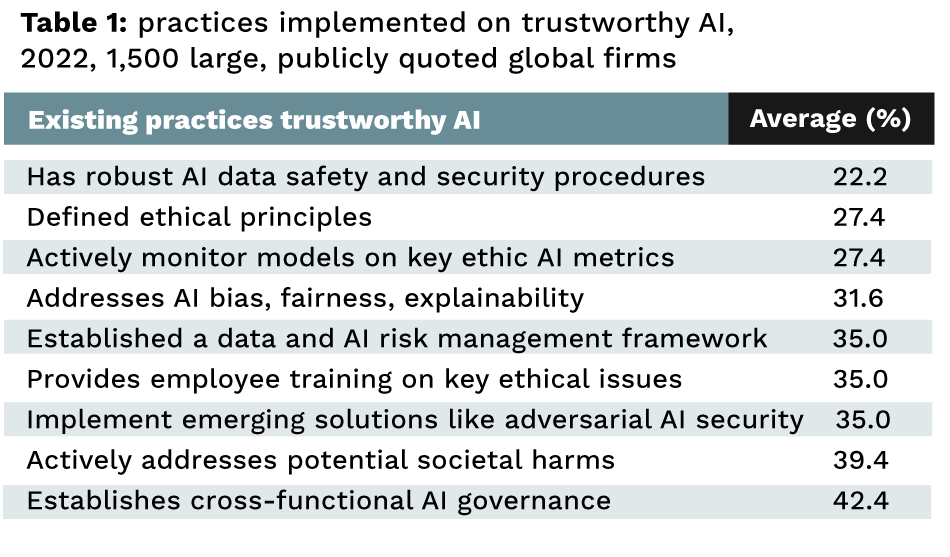

Even if putting such principles into practice is complex, businesses that get this translation right will create a win-win for society and for their own business goals. We recently worked with a major consultancy to assess how large, mostly publicly listed global companies are building their AI journey, including how they have launched organisational practices for trustworthy AI.

Our sample is unusual: it comprises more than 1,500 firms across 10 major countries, including the US, China and India, the leading European countries, Brazil, and South Africa, and it extends beyond the usual suspects of high-tech companies. Five messages stand out.

- Companies are not standing still. About 80 per cent of companies have launched some form of trustworthy AI practice.

- Companies, however, rarely embrace the full range of practices that form the backbone of trustworthy AI. On average, firms use four of the 10 organisational practices reviewed, and only 3 per cent of companies have adopted all of them.

- None of the practices considered has yet become mainstream (table 1).

- Country and sector seem to play a role in the degree of operationalisation. Europe lags behind Asia (India and Japan), Canada is ahead of the US, and high tech is not far ahead of other sectors.

- Operationalising trustworthy AI is a top priority for about 30 per cent of companies, not necessarily to expand their efforts further, but to catch up with their peers.

Learning from leaders

To date, there is no visible correlation between responsible AI practices and the return on AI investment, whether measured as revenue relative to investment or as AI project payback.

However, comparing the top 10 per cent of companies with the 30 per cent not yet operationalising trustworthy AI reveals some momentum. The more firms have exploited AI, and the more they have spent on it, the more widely they have implemented trustworthy AI and the higher the priority they give it over other actions. This pattern suggests that trustworthy AI is being implemented after AI diffusion. The next evolution to watch for is trustworthy AI becoming a condition of good practice in AI transformation.

About the Author

Jacques Bughin is CEO of machaonadvisory and a former professor of Management who retired from McKinsey as senior partner and director of the McKinsey Global Institute. He advises Antler and Fortino Capital, two major VC/PE firms, and serves on the board of a number of companies.

- Ethics guidelines for trustworthy AI | Shaping Europe’s digital future (europa.eu), available at: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

- Bughin, J. (2023a). “To ChatGPT or not to ChatGPT: A note to marketing executives”, Applied Marketing Analytics.

- Bughin, J. (2023b). “How productive is generative AI?”, Medium, available at: https://blog.gopenai.com/how-productive-is-generative-ai-large-under-the-right-setting-29702eb2de89

- Floridi, L., & Cowls, J. (2019). “A unified framework of five principles for AI in society”, Harvard Data Science Review, 1(1), 1-15.

- Haenlein, M., & Kaplan, A. (2019). “A brief history of artificial intelligence: On the past, present, and future of artificial intelligence”, California Management Review, 61(4), 5-14.

- Hagras, H. (2018). “Toward human-understandable, explainable AI”, Computer, 51(9), 28-36.

- McKnight, D.H., Carter, M., Thatcher, J.B., & Clay, P.F. (2011). “Trust in a specific technology: An investigation of its components and measures”, ACM Transactions on Management Information Systems (TMIS), 2(2), 1-25.

- Noy, S., & Zhang, W. (2023). “Experimental evidence on the productivity effects of generative artificial intelligence”, available at SSRN 4375283.

- Rudin, C. (2019). “Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead”, Nature Machine Intelligence, 1(5), 206-15.

- Thiebes, S., Lins, S., & Sunyaev, A. (2021). “Trustworthy artificial intelligence”, Electronic Markets, 31, 447-64.

- Wiens, J., Saria, S., Sendak, M., Ghassemi, M., Liu, V.X., Doshi-Velez, F., et al. (2019). “Do no harm: A roadmap for responsible machine learning for health care”, Nature Medicine, 25(9), 1337-40.