As organisations tap into the power of Generative Artificial Intelligence to improve business outcomes, it is imperative to examine how it affects their operating models. Only by doing this will companies leverage the power of AI while avoiding its pitfalls.

With the weekly drumbeat of Generative AI advancements and corporate leaders signalling the need for their organisations to make progress in harnessing the power of AI, larger questions are emerging for these same executives to address.

In addition to the ethical challenges that AI presents for their customers, employees, and society, companies must grapple with how AI will fundamentally shift their operating model, including the workforce they employ today.

Ignoring the seismic shifts brought about by AI, and in particular by Large Language Models (LLMs), is no longer a viable option for organisations.

The torrential rise of AI, championed by industry titans such as OpenAI, Google, Meta, Microsoft, and Nvidia, is rapidly reshaping how work gets done and how companies operate and deliver value to their customers and shareholders.

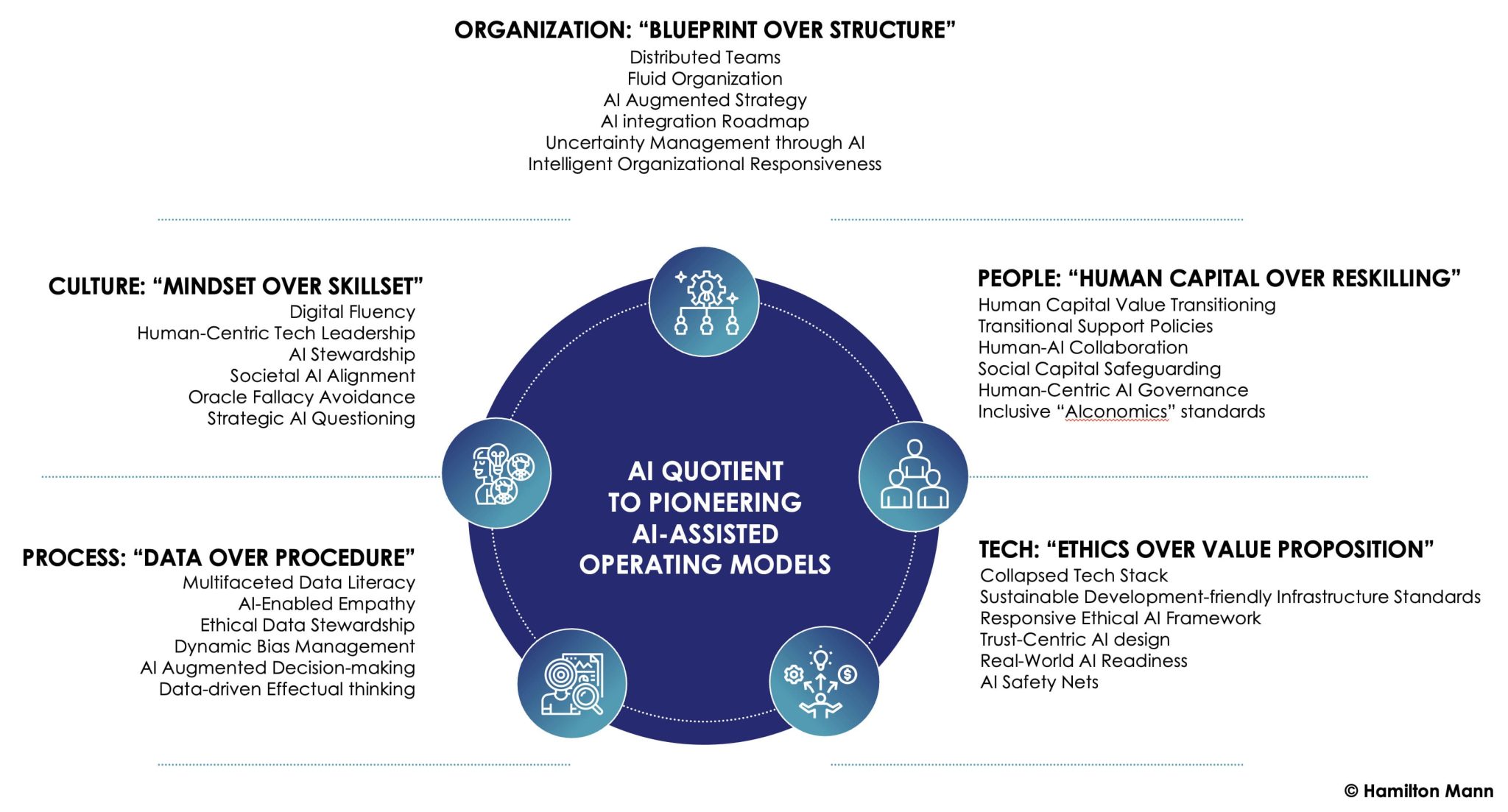

Let’s explore the key underlying components of organisations’ operating models (namely organisational structure, people, processes, technology, and culture), together with the informal and often unwritten mechanisms that capture their essence and are most profoundly transformed by AI’s influence.

Organisation: Blueprint over Structure

In an era dominated by rapid AI advancements, it’s crucial to assess the impact on organisations from the holistic perspective of an organisational blueprint, rather than merely an organisational structure.

The forward-looking and comprehensive nature of a blueprint, designed for adaptability, offers a more inclusive approach that anticipates future changes and seamlessly integrates AI’s transformative potential into the very fabric of an organisation’s operations and strategy.

Preparing an organisation for AI is less a matter of stringent modification and more a journey towards a fluid organisation. A rigid adaptation approach tends to breed complacency and a defensive clinging to the status quo, often arising from an innate human need for stability.

Conversely, a fluid organisation involves crafting a target model that provides a sense of reliability in handling the 80% of events that are predictable, while remaining flexible enough to navigate the 20% that are unforeseen.

AI can lend unprecedented proficiency in managing these unpredictable elements, simultaneously elevating the performance within that 80% of predictable events.

The primary concern lies in establishing a dynamic organisational structure that optimises efficiency in addressing these regular tasks, allowing AI to enhance this productivity while maximising agility in responding to the organisation’s blind spots.

Let’s not merely ask how to introduce AI into organisations. Instead, let’s question how to transform organisations and reshape an understanding of what’s possible with AI.

Culture: Mindset over Skillset

The digital realm may seem daunting, requiring a profound understanding of data, technology, algorithms, and AI, but this is a misperception.

Amid the surge of digital technology evolution, leaders need to take steps towards dispelling the myth of digital omniscience, emphasising a more critical and discerning approach to digital understanding.

Rather than cultivating an army of data scientists and programmers, the focus should be on fostering a mindset that embraces the potential of these systems.

The transition from traditional processes to digital ones is a journey of exploration—embracing change, questioning the status quo, and learning to consider the implications of AI—all while understanding that the goal is not mastery, but fluency in concepts such as system architecture, AI agents, cybersecurity, and data-driven experimentation.

Moreover, it’s imperative to acknowledge that AI, no matter how advanced, is more than just a tool.

Unlike other technological tools, AI has the capacity to learn, adapt, and even make decisions based on the data it receives. When referring to AI merely as a “tool”, it’s essential to ensure its profound implications are not underestimated, given the unique form of intelligence it embodies.

Such a mindset could lead to significant oversights, thereby running the risk of undesirable consequences by overlooking the necessary precautions required for its deployment.

Blind reliance on AI systems, or reliance without a clear sense of purpose or ethical considerations, is the pitfall to avoid.

True leadership in the digital age isn’t about tech prowess but the ability to integrate technology meaningfully into broader objectives, ensuring it aligns with human values and positive societal impact.

Leaders must ensure that the organisation pushes itself and is constantly challenged from within by its own mode of operation. AI must be approached not as an infallible oracle but as a powerful ally that, when used with discernment, can amplify human capacities.

Lastly, it’s essential to understand that the very essence of any digital technology is its evolutionary nature. What may be a groundbreaking innovation today could become obsolete tomorrow. Relying solely on the technical know-how of the present might lead to the trap of short-sightedness.

Leaders should, therefore, instil a culture of continuous learning, flexibility, and adaptability.

Embracing digital technology also means acknowledging its impermanence and the need to be proactive rather than reactive. Hence, this is not just about understanding current AI capabilities but also about anticipating those yet to come: not merely keeping pace with what is available but staying a step ahead and shaping its very trajectory.

Process: Data over Procedure

In the realm of AI, data stands as the centrepiece, driving the evolution of platforms, tools, and systems and facilitating greater efficiency and improved service delivery.

As AI begins to take on an increasingly dominant role in decision-making, a critical challenge has emerged: understanding the labyrinthine data-driven process of AI’s reasoning for the sake of trustworthiness.

Let’s move beyond the perfectionism of causality, which leads to linear, procedural thinking, and embrace the pragmatism of effectuality.

Embracing practices like highlighting relevant data sections contributing to AI outputs or building models that are more interpretable could enhance AI transparency. But is transparency the only antidote to the trust issues with AI? Or could there be a different approach that not only explains AI’s decisions but also anticipates its consequences?

Leaders must come to terms with the uncomfortable truth that AI’s decision-making capabilities often far exceed human comprehension. However, AI can help leaders understand in detail the associated effects of AI’s decision-making capabilities.

For instance, AI could simulate various scenarios to illustrate the potential outcomes of its recommendations. This way, AI can be used to understand the breadth and depth of its own impact. In a medical setting, AI might recommend a certain treatment plan. Anticipating the consequences means understanding how this treatment could affect the patient’s health outcomes, taking into consideration the individual’s unique medical history and circumstances.

It is also worth mentioning that AI’s effectiveness is heavily influenced by the data it processes. Forget about an unbiased dataset as the magic bullet for addressing bias. There will always be biases in datasets, as data are originally produced by humans and the process of refining them involves humans again.

Embracing the intrinsic nature of bias in datasets is a challenge that can lead to more accurate and adaptable AI models. This is achieved by recognising that total neutrality is a myth and integrating a diverse range of data to ensure AI models can respond to various contexts.

It’s not only about who curates the data.

While it’s beneficial to involve diverse teams in data collection and processing, overemphasis on representation might lead to enforced uniformity, suppressing the rich and natural variations in human expression and experience. Instead, a more balanced approach would allow AI models to learn and adapt from the organic nature of data, including biases, to respond more genuinely to different perspectives.

Finally, let’s rethink the trade-offs of large datasets vs small datasets.

The pursuit of larger AI systems by tech companies is not merely a race towards volume. Larger datasets encompass broader knowledge, mirroring the vast spectrum of human perspectives.

Reducing the size of a model for the sake of better understanding might, in fact, diminish the depth and richness of insights it can provide.

No matter how meticulously AI is developed and documented, it can never fully grasp the depth of human experiences and biases, which can lead to inadvertent harm.

Hence, the strategy shouldn’t be to eradicate bias but to acknowledge and manage it, reducing the associated risks while enabling us to navigate complex human biases and patterns effectively.

AI will only be truly powerful when it can navigate the complex, bias-ridden real world. That will only be achieved by appreciating the multifaceted nature of data and developing AI models that can recognise and adapt to these complexities, which are in essence not always “procedurable”.

People: Human Capital Value Transitioning over Reskilling

Leaders need to face the new or exacerbated Human Capital challenge AI poses.

The emergence of AI necessitates new skill sets and competencies, redefining what expertise is essential for delivering value in this new “AIconomic” era.

But it goes beyond that.

The prospect of AI triggering mass unemployment is often overshadowed by optimistic predictions based on historical technological revolutions. It is imperative, however, to examine AI’s impact not through the lens of the past, but in the context of its unique capabilities.

For instance, the transition from horse-and-buggy to automobiles indeed reshaped job markets but it did not render human skills redundant. AI, on the other hand, has the potential to do just that.

Contrary to the belief that AI should not create meaningful work products without human oversight, the use of AI in tasks like document generation can result in increased efficiency. Of course, human oversight is important to ensure quality, but relegating AI to merely auxiliary roles might prevent us from fully realising its potential.

Take Collective[i]’s AI system for instance. Yes, it may free up salespeople to focus on relationship building and actual selling, but it could also lead to a reduced need for human personnel, as AI handles an increasingly large share of sales tasks. The efficiencies of AI could easily shift from job enhancement to job replacement, creating a precarious future for many roles.

Similarly, while OpenAI’s Codex may make programming more efficient, it could, in the long run, undermine the value of human programmers. As AI progresses, the line between “basic purposes” and more complex tasks will blur.

Certainly, investments in education and upskilling form a key part of any strategy to cope with job displacement due to the rise of AI. This includes fostering new-age skills that enable workers to adapt to the changing employment landscape and thrive in AI-dominated sectors.

However, this approach alone may not be sufficient.

It is imperative to also craft comprehensive social and economic policies that provide immediate relief and long-term support to those displaced by AI’s advancement.

Unemployment benefits, for instance, could be reevaluated and expanded to cater to AI-induced job losses.

Moreover, addressing AI displacement should not solely focus on financial security. The social and psychological impacts of job loss — including the loss of identity, self-esteem, and social networks — are equally significant and need to be factored into policy planning.

Social support services and career counselling could be made widely accessible to help individuals navigate the transition period.

A Human Capital Value Transitioning Analysis can effectively cushion the impact of AI-induced displacement, helping to build a resilient and inclusive organisation that benefits from AI advancements while safeguarding its human capital.

Technology: Ethical Stances over Value Proposition

AI creates a need for novel policies and standards, necessitating a reevaluation of decision-making protocols and organisational conduct.

But let’s not assume that regulation alone will be enough to govern AI.

The agile nature of AI evolution has outpaced the regulation meant to keep it in check. The burden of ensuring that AI tools are used ethically and safely thus rests heavily on the shoulders of the companies employing them.

The role of AI ethics watchdogs and regulation is crucial, but their effectiveness can be limited by the rapidly changing landscape of AI. Relying too heavily on the arrival of external checks and balances, or waiting for them to take a stance before acting, could lead to complacency within organisations.

It is thus essential for leaders to foster a culture of ethical AI development and usage, and not just depend on external watchdogs or regulations.

While government regulations are evolving to address AI, organisations should proactively ensure their AI applications are responsible, fair, and ethical.

It’s not just about reaping the benefits of AI but also about responsibly integrating these technologies without causing harm to stakeholders. This necessitates not only technological sophistication but also ethical mindfulness and societal understanding.

The example of Zoom, the popular video conferencing software, illustrates this: the company recently made headlines when concerns were raised about an update to its terms of service that allows it to use customer data to train its artificial intelligence.

The path to responsible AI deployment is less about waiting for appropriate regulations and more about fostering a deep understanding and ethical use of the technology.

Pioneering AI-Driven Operating Models

In moving to an AI-ready Operating Model, organisations will need to chart their own AI-transformation journey by prioritising an adaptive blueprint over structure, emphasising mindset more than just skillset, valuing data above procedure, placing emphasis on the transition of human capital value instead of just reskilling, and elevating ethical stances above traditional value propositions.

To navigate this multi-dimensional transformation effectively, organisations would benefit from a structured approach to assess their readiness and progress in developing their Operating Model’s “AI Quotient”.

Companies that can quickly evolve towards these dimensions will begin to separate themselves from the pack in their respective industries in terms of the speed and impact of AI-driven innovations.

AI is like no other tech wave in history, with the potential to empower employees, reimagine work, and shift how companies deliver value in leaps rather than incremental steps. Accordingly, it requires a radical approach to transforming the operating model to unlock its full potential.

As organisations shift towards an AI-ready Operating Model, they must design their unique AI-transformation path. This means prioritising flexibility over fixed structures, focusing on mindset beyond just skills, valuing data over traditional procedures, emphasising the evolution of human capital value rather than mere reskilling, and prioritising ethical considerations over conventional value propositions.

About the Author

Hamilton Mann is the Group VP of Digital Marketing and Digital Transformation at Thales. He is also the President of the Digital Transformation Club of INSEAD Alumni Association France (IAAF), a mentor at the MIT Priscilla King Gray (PKG) Center, and Senior Lecturer at INSEAD, HEC and EDHEC Business School.